Create Dataproc Cluster, submit Hive job and delete cluster using gcloud command

Cloud Dataproc in Google Cloud Platform

Cloud Dataproc is Google’s managed service for running Hadoop and Spark jobs. It lets us create and manage clusters easily, and we can turn them off when we are not using them to reduce costs. It provides open source data tools (Hadoop, Spark, Hive, Pig, etc.) for batch processing, querying, streaming, and machine learning.

In this tutorial, we are going to perform the following steps using the gcloud command:

- Create Dataproc cluster

- Submit Hive job

- Delete the Dataproc cluster

Create Dataproc cluster using gcloud command

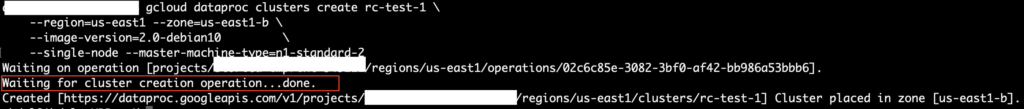

The command gcloud dataproc clusters create creates a Dataproc cluster in GCP. Let’s add a few arguments to that command to define the cluster specification.

    gcloud dataproc clusters create rc-test-1 \
    --region=us-east1 --zone=us-east1-b \
    --image-version=2.0-debian10 \
    --single-node --master-machine-type=n1-standard-2

We are creating a single-node cluster named rc-test-1 in the region us-east1. The image version bundles the operating system, big data components, and GCP connectors into one package that is deployed on the cluster.

We run this command on a machine where the Google Cloud SDK is configured. The Dataproc cluster rc-test-1 is created successfully in GCP.

We can also see the cluster details in the Google Cloud Console.
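The same check can be done from the command line. The following is a minimal sketch, assuming the Cloud SDK is already authenticated. The function name cluster_state is a hypothetical helper, not part of gcloud. It asks gcloud dataproc clusters describe for the status.state field, which should read RUNNING once the cluster is ready.

```shell
# cluster_state CLUSTER REGION
# Prints the lifecycle state of a Dataproc cluster (for example RUNNING).
# Hypothetical helper; only the gcloud call itself is real CLI syntax.
cluster_state() {
  gcloud dataproc clusters describe "$1" \
    --region="$2" \
    --format='value(status.state)'
}

# Usage (needs a configured Cloud SDK):
#   cluster_state rc-test-1 us-east1
```

Printing only one field keeps the output easy to use in scripts, for example to wait until the cluster is ready before submitting jobs.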

Submit Hive Job to the dataproc cluster

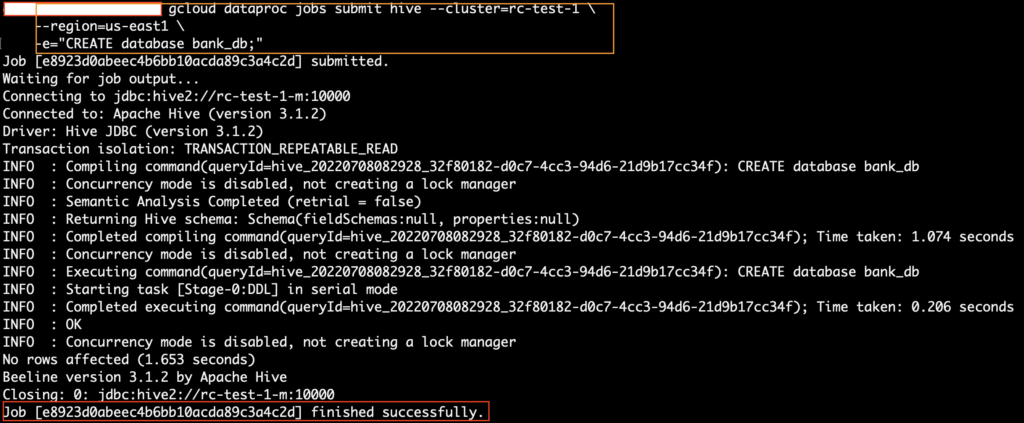

In this step, we are going to create a new database in Hive. Let’s create the database bank_db using the gcloud command.

    gcloud dataproc jobs submit hive --cluster=rc-test-1 \
    --region=us-east1 \
    -e="CREATE database bank_db;"

The command gcloud dataproc jobs submit hive submits a Hive job to the cluster. The --cluster and --region arguments identify the cluster that runs the job. To submit the Hive job with an inline query, we pass the query string with the -e argument (short for --execute).

The Hive job has been submitted successfully, and it created the new Hive database bank_db in the Dataproc cluster rc-test-1.

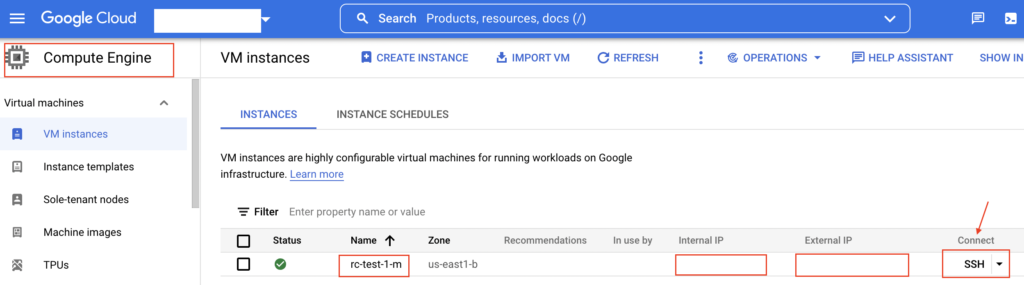
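If the same command is submitted a second time, the job fails because the database already exists. The following is a small sketch, assuming we want the step to be repeatable. The wrapper name create_hive_database is hypothetical; IF NOT EXISTS is standard HiveQL.

```shell
# create_hive_database CLUSTER REGION DATABASE
# Submits a Hive job that creates the database only when it is missing.
# Hypothetical wrapper around the same gcloud command used in this step.
create_hive_database() {
  gcloud dataproc jobs submit hive \
    --cluster="$1" \
    --region="$2" \
    --execute="CREATE DATABASE IF NOT EXISTS $3;"
}

# Usage (needs a configured Cloud SDK and a running cluster):
#   create_hive_database rc-test-1 us-east1 bank_db
```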

Let’s check the Hive database in the cluster. First we need to open the VM instance of the cluster, as shown below.

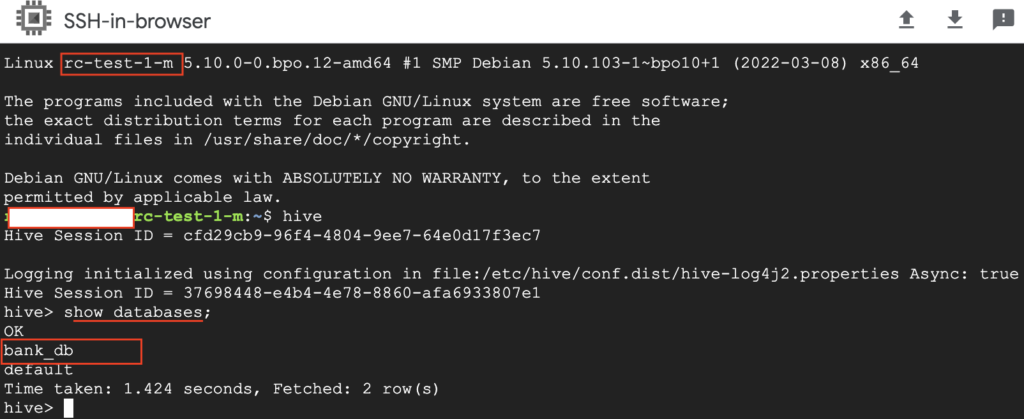

We connected to the machine using SSH, which opened in a browser window. Using the Hive CLI, we can see the database bank_db as below.
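The check can also be run from the local terminal instead of the browser. The sketch below rests on two assumptions: the master VM is named after the cluster with an -m suffix (rc-test-1-m here), and the hive CLI is on the PATH of that VM. The function name show_hive_databases is hypothetical.

```shell
# show_hive_databases CLUSTER ZONE
# Runs SHOW DATABASES through the Hive CLI on the cluster's master VM.
# Assumption: the master VM is called "<cluster>-m".
show_hive_databases() {
  gcloud compute ssh "$1-m" \
    --zone="$2" \
    --command='hive -e "SHOW DATABASES;"'
}

# Usage (needs a configured Cloud SDK and SSH access to the VM):
#   show_hive_databases rc-test-1 us-east1-b
```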

Submit multiple inline queries as Hive Job

In the previous example, we passed a single inline query to create the database. Similarly, we can run multiple Hive queries using the gcloud command. For that, we just need to separate the queries with a semicolon (;).

    gcloud dataproc jobs submit hive --cluster=rc-test-1 \
    --region=us-east1 \
    -e="CREATE table bank_db.customer_details(cust_id int,name string);
    CREATE table bank_db.transaction(txn_id int,amt int);" &> result.txt

To redirect the query results, including both stdout and stderr, to a file, we have used &> result.txt in the gcloud command (&> is Bash syntax). If we want to redirect stderr alone, we need to use 2> result.txt.
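The difference between these redirections can be tried locally without a cluster. In the sketch below, emit is a hypothetical stand-in for any command that writes to both streams, and the file names are made up for the demonstration. It uses > file 2>&1, the portable spelling of Bash's &> file.

```shell
# Stand-in for a command that writes to both streams (hypothetical helper).
emit() {
  echo "query result"        # goes to stdout
  echo "job log line" >&2    # goes to stderr
}

tmpdir="$(mktemp -d)"

# Both streams into one file; portable equivalent of Bash's "&> both.txt".
emit > "$tmpdir/both.txt" 2>&1

# Only stderr into the file; stdout still reaches the terminal.
emit 2> "$tmpdir/err.txt"

# One file per stream.
emit > "$tmpdir/out.txt" 2> "$tmpdir/log.txt"
```

Keeping the streams in separate files is handy when the query results need to be parsed without the job log mixed in.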

Submit Hive queries from file

In a production environment, we usually run the queries from a file. To do so, we can use the -f option of the gcloud command, which works like hive -f.

|

1 2 3 |

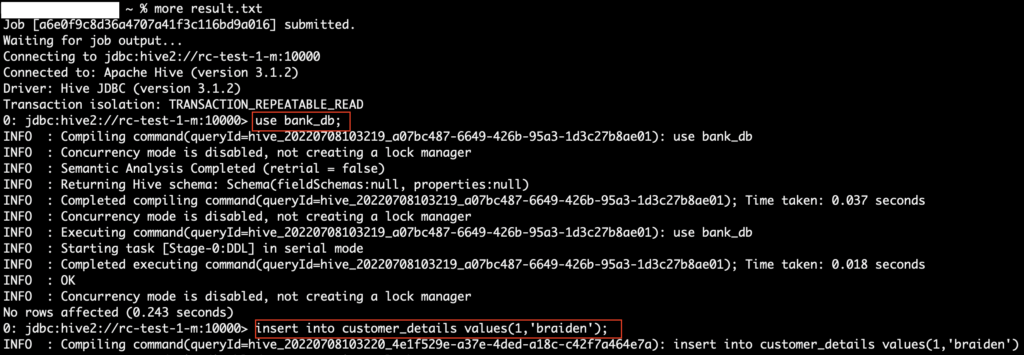

gcloud dataproc jobs submit hive --region=us-east1 \ --cluster=rc-test-1 \ -f hive-test-queries.q &> result.txt |

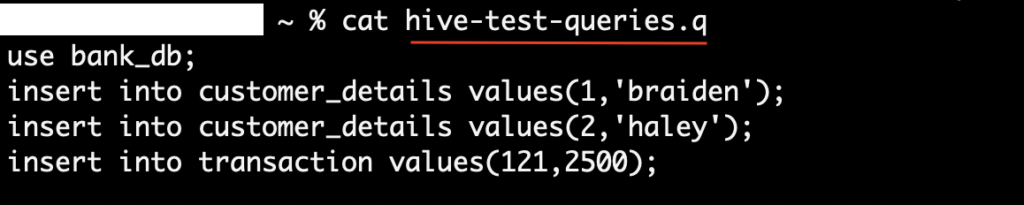

The file hive-test-queries.q contains the list of queries that will be executed as a Hive job.

The gcloud command has executed successfully. The query output can be verified in the result.txt file that we specified in the command. As shown below, the Hive queries are executed sequentially.
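For readers who want to follow along, a query file can be created with a here-document. The contents below are hypothetical, since the original file is only shown as a screenshot. They assume the two tables created in the previous step and use made-up sample rows.

```shell
# Write a sample query file. The statements and values are illustrative only.
cat > hive-test-queries.q <<'EOF'
-- Hypothetical sample data for the tables created earlier
INSERT INTO bank_db.customer_details VALUES (1, 'Alice'), (2, 'Bob');
INSERT INTO bank_db.transaction VALUES (101, 2500), (102, 900);
SELECT * FROM bank_db.customer_details;
SELECT * FROM bank_db.transaction;
EOF
```

The quoted 'EOF' marker stops the shell from expanding anything inside the file, so the HiveQL is written exactly as typed.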

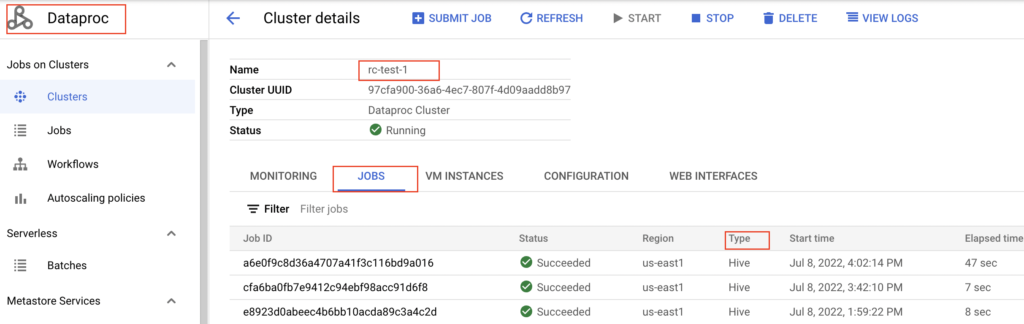

Verify the Hive jobs in Dataproc cluster

We can check the status of the Hive jobs in the Dataproc cluster. As we can see below, the jobs have succeeded.

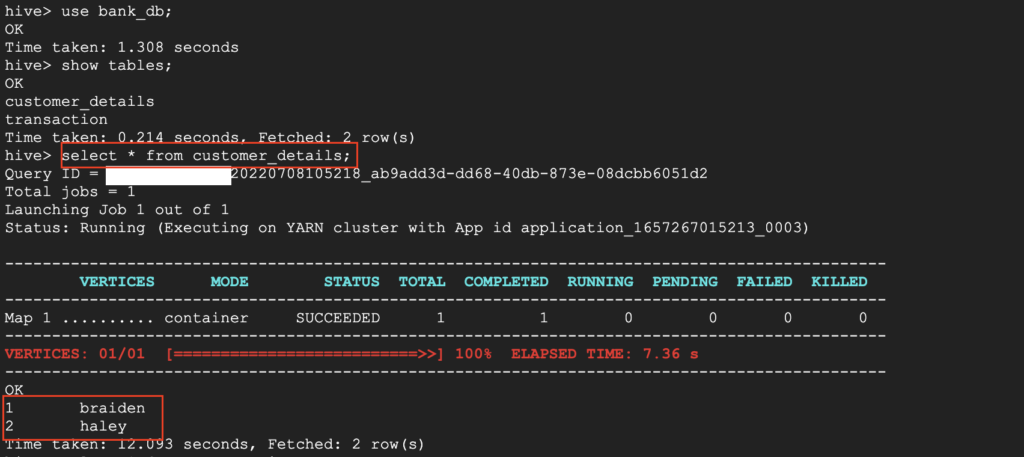
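The job status can also be listed from the terminal. This is a minimal sketch, assuming a configured Cloud SDK. The function name list_hive_jobs is hypothetical, and despite the name it lists every job on the cluster, not only Hive jobs. The --format expression narrows the output to the job ID and state.

```shell
# list_hive_jobs CLUSTER REGION
# Lists the jobs submitted to a cluster together with their state.
# Hypothetical helper around gcloud dataproc jobs list.
list_hive_jobs() {
  gcloud dataproc jobs list \
    --cluster="$1" \
    --region="$2" \
    --format='table(reference.jobId, status.state)'
}

# Usage (needs a configured Cloud SDK):
#   list_hive_jobs rc-test-1 us-east1
```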

Let’s verify the Hive tables using the SSH-in-browser option in the Google Cloud Console. The newly created tables have the records from our insert queries.

Hive table: customer_details

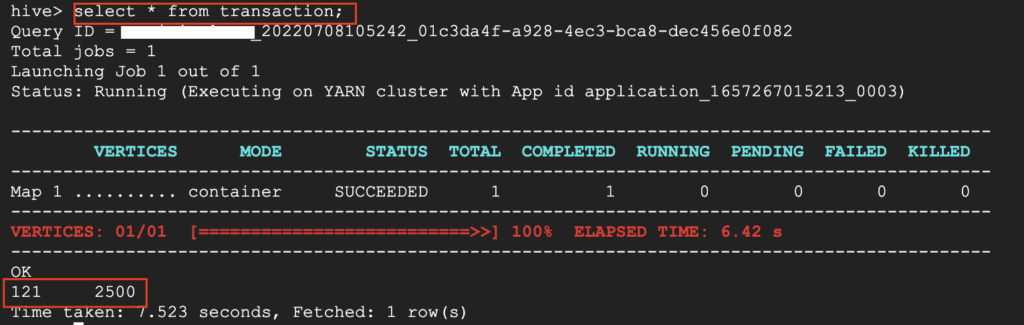

Hive table: transaction

Delete the Dataproc cluster

Once the jobs are completed, we can delete the Dataproc cluster with the command gcloud dataproc clusters delete. Please note that this deletes everything stored on the cluster, including our Hive tables.

    gcloud dataproc clusters delete rc-test-1 \
    --region=us-east1

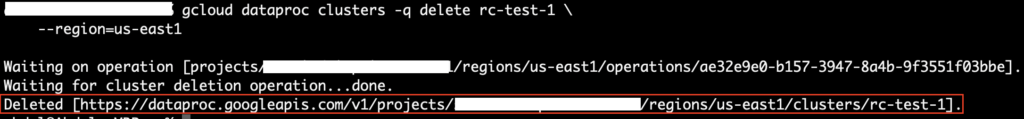

The cluster name rc-test-1 and its region us-east1 are given in the command. When we execute the command, it asks for confirmation before deleting the cluster, as shown below.

We want to delete the cluster without the prompt. For that, we need to specify --quiet or -q in the delete command. Let’s do that.

    gcloud dataproc clusters -q delete rc-test-1 \
    --region=us-east1

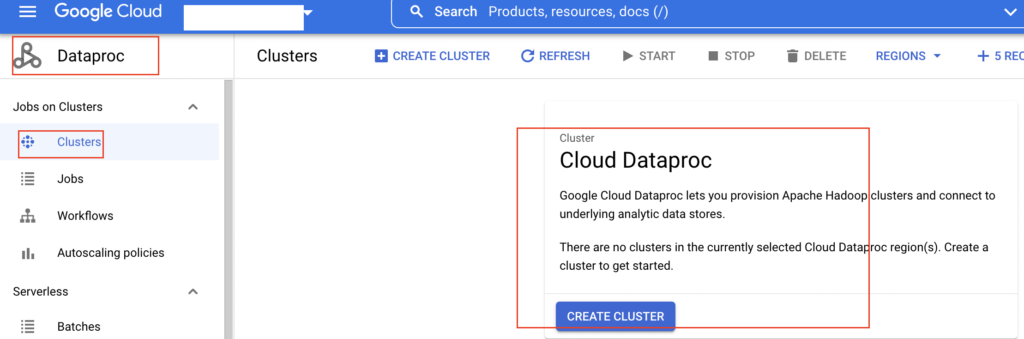

Finally, the Dataproc cluster rc-test-1 is deleted from the Google Cloud Platform. We can verify the same in the Google Cloud Console.
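The deletion can be confirmed from the terminal as well. The following sketch assumes a configured Cloud SDK. The function name cluster_exists is hypothetical. It lists the cluster names in the region and looks for an exact match.

```shell
# cluster_exists CLUSTER REGION
# Succeeds (exit status 0) only if a cluster with that exact name is listed.
# Hypothetical helper around gcloud dataproc clusters list.
cluster_exists() {
  gcloud dataproc clusters list \
    --region="$2" \
    --format='value(clusterName)' \
    | grep -Fxq "$1"
}

# Usage (needs a configured Cloud SDK):
#   if cluster_exists rc-test-1 us-east1; then
#     echo "cluster still exists"
#   else
#     echo "cluster is gone"
#   fi
```

The -F and -x options make grep match the whole line literally, so a cluster named rc-test-10 is not mistaken for rc-test-1.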
