How to execute a Select query on BigQuery using Java?

Contents

- 1 BigQuery

- 1.1 BigQuery Job

- 1.2 Prerequisite to run a BigQuery job using Java

- 1.3 Java program to execute a Select query on BigQuery:

- 1.3.1 Step 1: Set the client libraries in pom.xml

- 1.3.2 Step 2 : Import the Google Cloud BigQuery libraries in the program

- 1.3.3 Step 3 : Set the Service Account key credential

- 1.3.4 Step 4 : Initialize a BigQuery client

- 1.3.5 Step 5 : Define the query with a QueryJobConfiguration

- 1.3.6 Step 6 : Start a Bigquery job

- 1.3.7 Step 7 : Wait for the query to complete

- 1.3.8 Step 8 : Check the errors

- 1.3.9 Step 9 : Get the results

- 1.3.10 Step 10 : Print the results

- 1.4 Complete program

BigQuery

BigQuery is a fully managed and serverless datawarehouse system. It is part of Google Cloud Platform. It can process the massive amounts of data and provide the result quickly. In this tutorial, we are going to read the tables from BigQuery dataset using Java program. For this example, we are using the below SELECT query.

|

1 2 3 4 |

SELECT commit, author, repo_name FROM `bigquery-public-data.github_repos.commits` WHERE subject like '%bigquery%' ORDER BY subject DESC LIMIT 10 |

BigQuery Job

The unit of work in BigQuery is called as job. It can be load data, export data, query data, or copy data. If the job is created programmatically, BigQuery execute it asynchronously and can be polled for the status.

Prerequisite to run a BigQuery job using Java

- Project Id : To use the Google Cloud Platform, we need to create a project in GCP. Then GCP gives the project id which is a unique string used to differentiate our project from all others in Google Cloud.

- Service Account : A service account is a Google Account that is associated with our Google Cloud project. To access the BigQuery API using program/application, we need to create a service account. Then to access our project, we need to grant the certain roles to that service account.

- Service Account Key: For the service account, we need to create a key which is used in the program as a service account credential. BigQuery verify the client’s identity using the service account key.

Java program to execute a Select query on BigQuery:

To access the BigQuery, we need to install Google Cloud BigQuery Client libraries in our program. So we are creating the maven project as below.

Step 1: Set the client libraries in pom.xml

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 |

<!-- Using libraries-bom to manage versions. See https://github.com/GoogleCloudPlatform/cloud-opensource-java/wiki/The-Google-Cloud-Platform-Libraries-BOM --> <dependencyManagement> <dependencies> <dependency> <groupId>com.google.cloud</groupId> <artifactId>libraries-bom</artifactId> <version>25.2.0</version> <type>pom</type> <scope>import</scope> </dependency> </dependencies> </dependencyManagement> <dependencies> <dependency> <groupId>com.google.cloud</groupId> <artifactId>google-cloud-bigquery</artifactId> </dependency> </dependencies> |

Step 2 : Import the Google Cloud BigQuery libraries in the program

|

1 2 3 4 5 6 7 8 9 |

import com.google.cloud.bigquery.BigQuery; import com.google.cloud.bigquery.BigQueryOptions; import com.google.cloud.bigquery.FieldValueList; import com.google.cloud.bigquery.Job; import com.google.cloud.bigquery.JobId; import com.google.cloud.bigquery.JobInfo; import com.google.cloud.bigquery.QueryJobConfiguration; import com.google.cloud.bigquery.TableResult; import java.util.UUID; |

Step 3 : Set the Service Account key credential

As we mentioned earlier, key should be created for the service account. From GCP console, we can download that key in json file format. The same json file is used in the program to set the credential. The path of the json file is given in the code.

|

1 2 3 4 5 6 |

// Path of the Service Account key file String jsonPath = "D:\\Learning\\GCP\\my-rcs-project-833123-ef45632b1b12.json"; //Set the Service account key as credential Credentials credentials = GoogleCredentials .fromStream(new FileInputStream(jsonPath)); |

Step 4 : Initialize a BigQuery client

In this step, we are initializing the BigQuery client using our project id and service account credential.

|

1 2 3 4 5 |

//Initialize BigQuery Client by setting project id and credential BigQuery bigquery = BigQueryOptions.newBuilder() .setProjectId("my-rcs-project-833123") .setCredentials(credentials) .build().getService(); |

Step 5 : Define the query with a QueryJobConfiguration

Next we need to define our query in the QueryJobConfiguration as below. In this example, we are querying from bigquery public dataset bigquery-public-data.github_repos.commits .

|

1 2 3 4 5 6 7 |

QueryJobConfiguration queryConfig = QueryJobConfiguration.newBuilder( "SELECT commit, author, repo_name " + "FROM `bigquery-public-data.github_repos.commits` " + "WHERE subject like '%bigquery%' " + "ORDER BY subject DESC LIMIT 10") .build(); |

Step 6 : Start a Bigquery job

In this step, we are creating a job id and starting the BigQuery job with the BigQuery.create() method. The QueryJobConfiguration is passed to this method.

|

1 2 3 |

// Create a job ID so that we can safely retry. JobId jobId = JobId.of(UUID.randomUUID().toString()); Job queryJob = bigquery.create(JobInfo.newBuilder(queryConfig).setJobId(jobId).build()); |

Step 7 : Wait for the query to complete

|

1 2 |

// Wait for the query to complete. queryJob = queryJob.waitFor(); |

Step 8 : Check the errors

The below code used to check the errors in the BigQuery job.

|

1 2 3 4 5 6 7 8 |

// Check for errors if (queryJob == null) { throw new RuntimeException("Job no longer exists"); } else if (queryJob.getStatus().getError() != null) { // You can also look at queryJob.getStatus().getExecutionErrors() for all // errors, not just the latest one. throw new RuntimeException(queryJob.getStatus().getError().toString()); } |

Step 9 : Get the results

|

1 2 |

// Get the results. TableResult result = queryJob.getQueryResults(); |

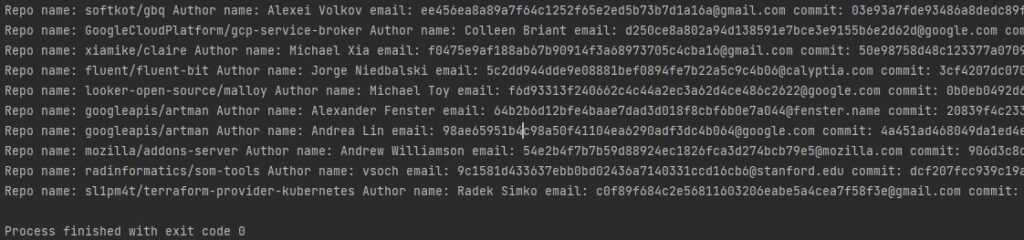

Step 10 : Print the results

Here we are iterating the each row and printing the same in the output screen.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 |

// Print all pages of the results. for (FieldValueList row : result.iterateAll()) { // String type String commit = row.get("commit").getStringValue(); // Record type FieldValueList author = row.get("author").getRecordValue(); String name = author.get("name").getStringValue(); String email = author.get("email").getStringValue(); // String Repeated type String repoName = row.get("repo_name").getRecordValue().get(0).getStringValue(); System.out.printf( "Repo name: %s Author name: %s email: %s commit: %s\n", repoName, name, email, commit); } |

Complete program

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 |

import com.google.api.client.util.Lists; import com.google.auth.Credentials; import com.google.auth.oauth2.GoogleCredentials; import com.google.cloud.bigquery.BigQuery; import com.google.cloud.bigquery.BigQueryOptions; import com.google.cloud.bigquery.FieldValueList; import com.google.cloud.bigquery.Job; import com.google.cloud.bigquery.JobId; import com.google.cloud.bigquery.JobInfo; import com.google.cloud.bigquery.QueryJobConfiguration; import com.google.cloud.bigquery.TableResult; import java.io.FileInputStream; import java.io.FileNotFoundException; import java.io.IOException; import java.util.UUID; public class QueryManager { public static void main(String[] args) throws InterruptedException, IOException { // Path of the Service Account key file String jsonPath = "D:\\Learning\\GCP\\my-rcs-project-833123-ef45632b1b12.json"; //Set the Service account key as credential Credentials credentials = GoogleCredentials .fromStream(new FileInputStream(jsonPath)); //Initialize BigQuery Client by setting project id and credential BigQuery bigquery = BigQueryOptions.newBuilder() .setProjectId("my-rcs-project-833123") .setCredentials(credentials) .build().getService(); // Define the query with a QueryJobConfiguration QueryJobConfiguration queryConfig = QueryJobConfiguration.newBuilder( "SELECT commit, author, repo_name " + "FROM `bigquery-public-data.github_repos.commits` " + "WHERE subject like '%bigquery%' " + "ORDER BY subject DESC LIMIT 10") .build(); // Create a job ID so that we can safely retry. JobId jobId = JobId.of(UUID.randomUUID().toString()); Job queryJob = bigquery.create(JobInfo.newBuilder(queryConfig).setJobId(jobId).build()); // Wait for the query to complete. queryJob = queryJob.waitFor(); // Check for errors if (queryJob == null) { throw new RuntimeException("Job no longer exists"); } else if (queryJob.getStatus().getError() != null) { // You can also look at queryJob.getStatus().getExecutionErrors() for all // errors, not just the latest one. throw new RuntimeException(queryJob.getStatus().getError().toString()); } // Get the results. TableResult result = queryJob.getQueryResults(); // Print all pages of the results. for (FieldValueList row : result.iterateAll()) { // String type String commit = row.get("commit").getStringValue(); // Record type FieldValueList author = row.get("author").getRecordValue(); String name = author.get("name").getStringValue(); String email = author.get("email").getStringValue(); // String Repeated type String repoName = row.get("repo_name").getRecordValue().get(0).getStringValue(); System.out.printf( "Repo name: %s Author name: %s email: %s commit: %s\n", repoName, name, email, commit); } } } |

Output

Finally we executed our Java program to read the data from BigQuery dataset bigquery-public-data.github_repos.commits. As we shown below, the program has printed the results.

Output in Google cloud console

Also we have verified the results of the select query in the Google cloud console. It looks good.

Recommended Articles

- How to export data from BigQuery table to a file in Cloud Storage?

- How to run a BigQuery SQL using Python?

References